The demand for cloud computing solutions has skyrocketed in recent years as businesses of all sizes strive to harness the power of the cloud to streamline operations and improve overall efficiency. However, simply moving to the cloud is not enough; optimizing and tuning cloud performance is crucial to ensure maximum efficiency and cost-effectiveness. In this comprehensive guide, we will delve into the world of cloud performance optimization and tuning, exploring the strategies and techniques that can help businesses achieve peak performance in the digital era.

Understanding Cloud Performance Metrics

Summary: This section provides an overview of the key performance metrics used to evaluate cloud performance, such as response time, throughput, and latency. It explains how to interpret these metrics and the impact they have on overall performance.

Response Time

Response time is a critical performance metric that measures the time it takes for a cloud system to respond to a user’s request. A low response time is desirable as it ensures a seamless user experience. Factors that can impact response time include network latency, server processing time, and the complexity of the requested task. To optimize response time, businesses should focus on reducing network latency, optimizing server performance, and implementing efficient algorithms.
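An average response time can hide slow outliers, so response time is usually reported as percentiles (p50, p95, p99). The following sketch computes nearest-rank percentiles from a list of recorded response times in milliseconds. The `percentile` helper and the sample values are illustrative and are not taken from any particular monitoring tool.

```python
import math

def percentile(samples_ms, p):
    """Return the p-th percentile (0 < p <= 100) using the nearest-rank method."""
    if not samples_ms:
        raise ValueError("no samples")
    ordered = sorted(samples_ms)
    # Nearest rank: smallest value with at least p% of samples at or below it.
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]

# Hypothetical response times for ten requests, in milliseconds.
samples = [120, 95, 110, 3000, 105, 98, 130, 101, 99, 115]

for p in (50, 95, 99):
    print(f"p{p}: {percentile(samples, p)} ms")
```

In this sample, the single 3000 ms outlier pulls the mean up to about 397 ms. The median (p50) stays at 105 ms, and the outlier appears only in the tail percentiles.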

Throughput

Throughput refers to the amount of work a cloud system can handle within a given timeframe. It is measured in terms of requests processed per second or data transferred per second. High throughput is essential for handling a large number of user requests and ensuring timely data delivery. To optimize throughput, businesses should focus on network optimization, load balancing, and efficient resource allocation.
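Throughput is completed work divided by elapsed time. The minimal sketch below assumes you have a completion timestamp (in seconds) for each request and computes requests per second over a fixed window. The timestamps are made up for illustration.

```python
def throughput_rps(completion_times, window_start, window_end):
    """Requests completed per second within [window_start, window_end)."""
    duration = window_end - window_start
    if duration <= 0:
        raise ValueError("window must have positive length")
    completed = sum(1 for t in completion_times if window_start <= t < window_end)
    return completed / duration

# Hypothetical completion timestamps (seconds since the test started).
timestamps = [0.1, 0.4, 0.9, 1.2, 1.5, 1.7, 2.3, 2.8, 3.1, 3.9]
print(throughput_rps(timestamps, 0.0, 4.0))  # 10 requests / 4 s = 2.5 req/s
```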

Latency

Latency measures the time it takes for data packets to travel from the user to the cloud server and back. High latency can result in slow response times and poor user experience. Factors that can contribute to latency include network congestion, geographical distance between users and servers, and inefficient routing. To optimize latency, businesses should consider implementing content delivery networks (CDNs), leveraging edge computing, and strategically locating their cloud servers.
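One rough, dependency-free way for a client to observe network latency is to time a TCP handshake, which takes about one network round trip. The sketch below uses only the standard library. The host and port are assumptions. A local listener stands in for the server so that the example runs offline, which means local results will be far below real-world numbers.

```python
import socket
import time

def tcp_connect_latency_ms(host, port, timeout=2.0):
    """Time a TCP handshake (roughly one network round trip), in milliseconds."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass  # connection established; close it immediately
    return (time.perf_counter() - start) * 1000

# A local listener stands in for a remote server; point this at a real host/port instead.
with socket.create_server(("127.0.0.1", 0)) as server:  # port 0: the OS picks a free port
    port = server.getsockname()[1]
    latencies = [tcp_connect_latency_ms("127.0.0.1", port) for _ in range(5)]

print(f"min {min(latencies):.3f} ms, max {max(latencies):.3f} ms")
```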

By monitoring and analyzing these performance metrics, businesses can gain valuable insights into the overall health and efficiency of their cloud systems. This knowledge enables them to identify areas for improvement and make data-driven decisions to optimize performance.

Identifying Performance Bottlenecks in the Cloud

Summary: This section explores the common performance bottlenecks that can hinder cloud performance, such as network congestion, resource limitations, and inefficient application design. It provides insights into how to identify and address these bottlenecks effectively.

Network Congestion

Network congestion occurs when the network infrastructure is overloaded with traffic, resulting in delays and reduced performance. Identifying network congestion requires monitoring network traffic and analyzing performance metrics such as packet loss and network utilization. To address network congestion, businesses can implement traffic shaping techniques, upgrade network bandwidth, or use quality of service (QoS) mechanisms to prioritize critical traffic.
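Traffic shaping is commonly built on a token bucket. Tokens refill at a steady rate, each request or packet consumes one, and bursts are allowed up to the bucket's capacity. The minimal single-threaded sketch below uses illustrative rate and capacity values. The clock is injectable so the behaviour can be tested deterministically.

```python
import time

class TokenBucket:
    """Allow bursts of up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self, cost=1):
        now = self.clock()
        # Refill for the time elapsed since the last check, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # the caller should delay (shape) or drop (police) this request

# Usage with a fake clock: 2 tokens/s, bursts of up to 3.
fake_now = [0.0]
bucket = TokenBucket(rate=2, capacity=3, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(4)])  # [True, True, True, False]
fake_now[0] = 1.0                          # one second later: 2 tokens refilled
print([bucket.allow() for _ in range(3)])  # [True, True, False]
```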

Resource Limitations

Inadequate resources, such as CPU, memory, or storage, can become performance bottlenecks in the cloud. Monitoring resource utilization and performance metrics, such as CPU usage and memory usage, can help identify resource limitations. To address resource constraints, businesses can scale up their cloud infrastructure by adding more resources or optimize resource allocation by implementing load balancing and auto-scaling mechanisms.

Inefficient Application Design

Poorly designed applications can have a significant impact on cloud performance. Common issues include inefficient algorithms, excessive database queries, and lack of caching mechanisms. Conducting code reviews, profiling application performance, and analyzing database queries can help identify areas for improvement. By optimizing application design, businesses can reduce resource usage, improve response times, and enhance overall performance.

Third-Party Dependencies

Many cloud-based applications rely on third-party services and APIs. Performance issues with these dependencies can affect the overall performance of the application. Monitoring the response times and availability of third-party services can help identify performance bottlenecks. To mitigate these issues, businesses can implement failover mechanisms, utilize caching for third-party responses, and consider alternative providers if necessary.
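The minimal sketch below combines failover with caching of third-party responses. The provider functions are hypothetical stand-ins for real API clients. The pattern works in three steps:

1. Serve a fresh cached response if one exists.
2. Otherwise, try each provider in order.
3. If every provider fails, fall back to a stale cached value.

```python
import time

class FailoverClient:
    def __init__(self, providers, ttl_seconds=60, clock=time.monotonic):
        self.providers = providers  # callables tried in order, primary first
        self.ttl = ttl_seconds
        self.clock = clock
        self.cache = {}             # key -> (value, fetched_at)

    def get(self, key):
        cached = self.cache.get(key)
        if cached and self.clock() - cached[1] < self.ttl:
            return cached[0]        # fresh cache hit: no external call
        for provider in self.providers:
            try:
                value = provider(key)
            except Exception:
                continue            # this provider failed: fail over to the next one
            self.cache[key] = (value, self.clock())
            return value
        if cached:
            return cached[0]        # all providers down: serve stale data
        raise RuntimeError(f"all providers failed for {key!r}")

# Hypothetical providers: the primary is down, the backup answers.
def primary(key):
    raise TimeoutError("primary timed out")

def backup(key):
    return f"rate-for-{key}"

client = FailoverClient([primary, backup])
print(client.get("EUR"))  # rate-for-EUR (served by backup, then cached)
```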

By proactively identifying and addressing performance bottlenecks, businesses can optimize cloud performance and ensure smooth operations. Regular monitoring and analysis are crucial to staying ahead of potential issues and continuously improving the performance of their cloud systems.

Leveraging Automated Performance Monitoring Tools

Summary: This section discusses the importance of automated performance monitoring tools and how they can help businesses proactively detect and resolve performance issues. It highlights some of the top performance monitoring tools available in the market today.

Why Automated Performance Monitoring?

Manual monitoring of cloud performance can be time-consuming and prone to human error. Automated performance monitoring tools enable businesses to continuously monitor key performance metrics and receive real-time alerts when performance issues arise. These tools provide valuable insights into the health of cloud systems, enabling businesses to identify and resolve performance issues before they impact users.
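At their core, automated monitors evaluate rules such as "alert if the average of the last N samples exceeds a threshold." The tool-agnostic sketch below implements one such rule with illustrative window and threshold values. Commercial products layer deduplication, notification routing, and much more on top of rules like this.

```python
from collections import deque

class ThresholdAlert:
    """Fire when the rolling average of the last `window` samples exceeds `threshold`."""

    def __init__(self, threshold, window):
        self.threshold = threshold
        self.samples = deque(maxlen=window)  # old samples drop off automatically

    def observe(self, value):
        self.samples.append(value)
        if len(self.samples) < self.samples.maxlen:
            return False  # not enough data yet to judge
        return sum(self.samples) / len(self.samples) > self.threshold

# Alert if average CPU utilization over the last 3 samples exceeds 80%.
cpu_alert = ThresholdAlert(threshold=80, window=3)
readings = [70, 85, 90, 95, 40]
print([cpu_alert.observe(r) for r in readings])  # [False, False, True, True, False]
```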

Top Performance Monitoring Tools

There are numerous performance monitoring tools available in the market, each offering unique features and capabilities. Some of the top tools include:

1. New Relic

New Relic is a comprehensive performance monitoring tool that offers real-time visibility into application performance, infrastructure, and user experience. It provides detailed performance metrics, error tracking, and automated root cause analysis.

2. Datadog

Datadog is a cloud monitoring platform that offers comprehensive performance monitoring, log management, and application performance management. It provides real-time insights into infrastructure and application performance and allows for proactive performance optimization.

3. Dynatrace

Dynatrace is an AI-powered performance monitoring tool that offers end-to-end visibility into application performance and user experience. It uses advanced analytics to identify performance bottlenecks and provides actionable insights for optimization.

4. AppDynamics

AppDynamics is a performance monitoring and application intelligence tool that provides real-time visibility into application performance and business metrics. It offers advanced diagnostics and analytics to optimize application performance and ensure a seamless user experience.

These are just a few examples of the performance monitoring tools available in the market. When selecting a tool, businesses should consider their specific requirements, scalability, ease of use, and integration capabilities with their existing cloud infrastructure.

Optimizing Cloud Infrastructure for Performance

Summary: This section delves into the various strategies and best practices for optimizing cloud infrastructure to enhance performance. It covers topics such as load balancing, resource allocation, and server configuration.

Load Balancing

Load balancing is a technique used to distribute incoming network traffic across multiple servers to ensure optimal resource utilization and prevent overloading. Load balancers can be implemented at the application or network level. By effectively distributing traffic, load balancing improves response times and enhances overall performance.

Application-Level Load Balancing

Application-level load balancing distributes traffic based on the content of each request, such as URL paths, HTTP headers, or cookies. Algorithms such as round-robin or least connections spread requests across servers, and session affinity (sticky sessions) can keep a given user on the same server. Application-level load balancers operate at the application layer (Layer 7) of the OSI model.
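Two of the balancing algorithms named above fit in a few lines of code. In the sketch below, the server names are hypothetical. A real balancer would also run health checks and would decrement connection counts when requests finish.

```python
import itertools

class RoundRobin:
    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)  # each server in turn

class LeastConnections:
    def __init__(self, servers):
        self.active = {s: 0 for s in servers}

    def pick(self):
        server = min(self.active, key=self.active.get)  # fewest open connections
        self.active[server] += 1
        return server

    def release(self, server):
        self.active[server] -= 1  # call when a connection closes

servers = ["app-1", "app-2", "app-3"]
rr = RoundRobin(servers)
print([rr.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']

lc = LeastConnections(servers)
first, second = lc.pick(), lc.pick()  # app-1, then app-2
lc.release(first)                     # app-1's connection finishes
print(lc.pick())                      # app-1 (tied with app-3 at 0; dict order breaks the tie)
```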

Network-Level Load Balancing

Network-level load balancing distributes traffic using transport- and network-layer information, such as IP addresses and TCP or UDP ports, without inspecting application content such as HTTP headers. This can be achieved through techniques such as round-robin DNS or dedicated load balancing hardware. Network-level load balancers operate at the transport or network layer (Layer 4 or Layer 3) of the OSI model.

Resource Allocation

Efficient resource allocation is crucial for optimizing cloud infrastructure performance. Businesses should carefully monitor resource utilization and ensure that resources are allocated based on workload demands. Techniques such as auto-scaling can automatically adjust resource allocation based on predefined thresholds, ensuring optimal performance and cost-effectiveness.

Server Configuration

Proper server configuration plays a vital role in optimizing cloud performance. Businesses should consider factors such as the choice of operating system, network settings, disk configuration, and security configurations. Fine-tuning these configurations can significantly enhance server performance and overall cloud performance.

Storage Optimization

Efficient storage management is key to optimizing cloud performance. Techniques such as data deduplication, compression, and caching can help reduce storage costs and improve data retrieval times. Utilizing distributed file systems and object storage solutions can also enhance scalability and performance.
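Deduplication and compression can work together. Data is split into chunks, each unique chunk is stored once under its content hash, and the stored chunks are compressed. The minimal in-memory sketch below assumes fixed-size chunks, whereas production systems often use variable, content-defined chunking. The tiny chunk size is only for the demonstration, since compressing 4-byte chunks would inflate them in practice.

```python
import hashlib
import zlib

class DedupStore:
    def __init__(self, chunk_size=4096):
        self.chunk_size = chunk_size
        self.chunks = {}  # sha256 hex digest -> compressed chunk bytes

    def put(self, data: bytes):
        """Store data; return the list of chunk hashes needed to rebuild it."""
        recipe = []
        for i in range(0, len(data), self.chunk_size):
            chunk = data[i:i + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            if digest not in self.chunks:  # identical chunks are stored only once
                self.chunks[digest] = zlib.compress(chunk)
            recipe.append(digest)
        return recipe

    def get(self, recipe):
        return b"".join(zlib.decompress(self.chunks[d]) for d in recipe)

store = DedupStore(chunk_size=4)
recipe = store.put(b"ABCDABCDABCDWXYZ")
print(len(recipe), len(store.chunks))  # 4 chunks referenced, 2 unique chunks stored
```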

By implementing these strategies and best practices, businesses can optimize their cloud infrastructure for performance, ensuring that resources are utilized effectively and user experience is enhanced.

Fine-Tuning Application Performance in the Cloud

Summary: This section focuses on optimizing application performance in the cloud, exploring techniques such as caching, database optimization, and code optimization. It provides actionable tips on how to fine-tune applications to deliver optimal performance.

Implementing Caching Mechanisms

Caching is a technique used to store frequently accessed data in memory for faster retrieval. By caching data, businesses can reduce the need for repetitive database queries, improving response times and overall application performance. Caching can be implemented at various levels, such as in-memory caching, database query caching, or content delivery network (CDN) caching.
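A common way to apply in-memory caching is the cache-aside pattern. The application checks the cache first and calls the slow backend only on a miss, then stores the result with an expiry time. In the sketch below, `load_user_from_db` is a hypothetical placeholder for a real database query.

```python
import time

class TTLCache:
    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._data = {}  # key -> (value, expires_at)

    def get_or_load(self, key, loader):
        entry = self._data.get(key)
        if entry and entry[1] > self.clock():
            return entry[0]  # hit: skip the backend
        value = loader(key)  # miss or expired: load and cache
        self._data[key] = (value, self.clock() + self.ttl)
        return value

calls = []
def load_user_from_db(user_id):  # hypothetical slow query
    calls.append(user_id)
    return {"id": user_id, "name": f"user{user_id}"}

cache = TTLCache(ttl_seconds=30)
cache.get_or_load(42, load_user_from_db)
cache.get_or_load(42, load_user_from_db)
print(len(calls))  # 1: the second lookup was served from memory
```

If entries never need to expire, Python's built-in `functools.lru_cache` decorator is a simpler option.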

Database Optimization

Efficient database design and optimization are crucial for application performance. Techniques such as indexing, query optimization, and schema design can significantly improve database performance. Businesses should analyze their database queries, identify bottlenecks, and implement optimizations such as creating appropriate indexes, reducing redundant queries, and optimizing database schema.
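A query plan shows clearly what an index changes. The sketch below uses Python's built-in `sqlite3` module and a hypothetical `orders` table. Other databases expose the same idea through their own `EXPLAIN` commands, with different output formats, and the exact plan text also varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, i * 1.5) for i in range(10_000)],
)

query = "SELECT * FROM orders WHERE customer_id = ?"

def plan(sql):
    # The last column of each EXPLAIN QUERY PLAN row is a human-readable description.
    return " ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, (7,)))

before = plan(query)  # full table scan, e.g. "SCAN orders"
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(query)   # e.g. "SEARCH orders USING INDEX idx_orders_customer (customer_id=?)"
print(before)
print(after)
```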

Code Optimization

Code optimization plays a vital role in fine-tuning application performance. By eliminating unnecessary code, optimizing algorithms, and reducing resource-intensive operations, businesses can improve response times and overall application efficiency. Techniques such as code profiling, refactoring, and using efficient data structures can help identify and optimize performance bottlenecks in the code.

Asynchronous Processing

Using asynchronous processing techniques can enhance application performance by allowing tasks to run concurrently. By leveraging asynchronous programming models, businesses can handle multiple requests simultaneously, improving scalability and response times. Techniques such as event-driven architecture, non-blocking I/O, and message queues can be utilized to implement asynchronous processing effectively.
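Non-blocking I/O lets a single process overlap many waits. In the `asyncio` sketch below, `fetch` is a hypothetical stand-in for a network call, simulated with `asyncio.sleep`. The three 0.2-second calls run concurrently, so they finish in roughly 0.2 s rather than 0.6 s.

```python
import asyncio
import time

async def fetch(name, delay):
    await asyncio.sleep(delay)  # stands in for awaiting a network response
    return f"{name} done"

async def main():
    start = time.perf_counter()
    # gather() runs the coroutines concurrently and returns results in call order.
    results = await asyncio.gather(
        fetch("profile", 0.2), fetch("orders", 0.2), fetch("recommendations", 0.2)
    )
    return results, time.perf_counter() - start

results, elapsed = asyncio.run(main())
print(results, f"{elapsed:.2f}s")
```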

Data Transfer Optimization

Efficient data transfer is essential for optimizing application performance in the cloud. Techniques such as data compression, data chunking, and parallel processing can help reduce data transfer times and improve overall application responsiveness. Businesses should analyze their data transfer patterns and implement optimization techniques based on their specific requirements.
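Compression, chunking, and parallel processing can be combined. A payload is split into fixed-size chunks, the chunks are compressed concurrently, and each chunk is sent independently, which also allows a single failed chunk to be retried. The standard-library sketch below assumes a 64 KiB chunk size, a value you would tune for your network and payloads. It uses a thread pool for simplicity, so whether that speeds things up depends on your runtime and is worth measuring.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 64 * 1024  # assumed 64 KiB chunks

def split(data: bytes, size: int = CHUNK_SIZE):
    return [data[i:i + size] for i in range(0, len(data), size)]

def compress_chunks(data: bytes, workers: int = 4):
    # Compress chunks concurrently; pool.map preserves chunk order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(zlib.compress, split(data)))

def reassemble(compressed_chunks):
    return b"".join(zlib.decompress(c) for c in compressed_chunks)

# Hypothetical CSV export of repetitive metrics data (compresses well).
payload = b"timestamp,cpu,mem\n" + b"2024-01-01T00:00:00,42,1024\n" * 20_000
packed = compress_chunks(payload)
print(len(payload), "->", sum(len(c) for c in packed), "bytes in", len(packed), "chunks")
```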

External Service Optimization

Many cloud-based applications rely on external services, such as APIs or third-party integrations. Optimizing the usage of these services is crucial for application performance. Businesses should analyze their dependencies, minimize unnecessary API calls, and implement caching mechanisms for external service responses. Additionally, monitoring the performance of external services and considering alternative providers can help ensure optimal application performance.

By implementing these fine-tuning techniques, businesses can optimize their applications for maximum performance in the cloud. Continuously monitoring and analyzing performance metrics, along with regular optimization efforts, can help maintain optimal application performance over time.

Scaling for High Performance and Availability

Summary: This section discusses the importance of scaling cloud resources to ensure high performance and availability during peak demand. It explores different scaling strategies, including vertical and horizontal scaling, and offers insights into when and how to implement them.

Vertical Scaling

Vertical scaling involves increasing the capacity of individual resources, such as upgrading to a more powerful server or adding more memory to an existing server. Vertical scaling is suitable when the application’s performance can be improved by increasing the resources of a single server. This approach is often used when there is a need for additional processing power, memory, or storage capacity.

Horizontal Scaling

Horizontal scaling involves adding more instances of resources, such as adding more servers to distribute the workload. Horizontal scaling is suitable when the application’s performance can be improved by distributing the workload across multiple servers. This approach is often used when there is a need for high availability, fault tolerance, and the ability to handle increased traffic loads.

Auto-Scaling

Auto-scaling is a dynamic scaling approach that automatically adjusts resource capacity based on predefined rules and performance metrics. By monitoring application performance and workload demand, auto-scaling mechanisms can add or remove resources as needed, ensuring optimal performance and cost-effectiveness. Auto-scaling is particularly useful for applications with unpredictable or fluctuating traffic patterns.
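Many target-tracking autoscalers use a proportional rule. Kubernetes' Horizontal Pod Autoscaler, for example, documents it as desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric) and skips scaling within a small tolerance band (10% by default). The sketch below implements that rule with minimum and maximum bounds. The 60% CPU target and the bounds are illustrative.

```python
import math

def desired_capacity(current, metric, target, min_size=1, max_size=20, tolerance=0.1):
    """Proportional target tracking: resize so the per-instance metric nears `target`."""
    ratio = metric / target
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to target: avoid flapping
    desired = math.ceil(current * ratio)
    return max(min_size, min(max_size, desired))

print(desired_capacity(current=4, metric=90, target=60))  # 6: scale out
print(desired_capacity(current=4, metric=30, target=60))  # 2: scale in
print(desired_capacity(current=4, metric=63, target=60))  # 4: within tolerance
```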

Elastic Load Balancing

Managed load balancing services, such as AWS Elastic Load Balancing and comparable offerings from other cloud providers, automatically distribute incoming traffic across multiple instances. Because traffic is spread evenly, resources are used efficiently, no single instance becomes overloaded, and the application gains availability and scalability. Elastic load balancing is often combined with horizontal scaling to achieve optimal performance and availability.

Database Scaling

Scaling databases is crucial for applications that rely heavily on database operations. Techniques such as sharding, replication, and using distributed databases can help distribute the database workload and improve performance. Scaling databases requires careful planning and consideration of data consistency, replication strategies, and potential performance bottlenecks.
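Sharding needs a deterministic way to map each key to a shard. The minimal sketch below uses a stable hash from `hashlib`. Python's built-in `hash()` is randomized per process for strings, so it would route the same key differently across application instances. The shard names are hypothetical. Plain modulo hashing remaps most keys whenever the shard count changes, which is why consistent hashing or range-based sharding is often preferred in practice.

```python
import hashlib

SHARDS = ["users-db-0", "users-db-1", "users-db-2", "users-db-3"]  # hypothetical

def shard_for(key: str, shards=SHARDS) -> str:
    # A stable hash ensures every application instance routes a key identically.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return shards[int.from_bytes(digest[:8], "big") % len(shards)]

for user_id in ["alice", "bob", "carol"]:
    print(user_id, "->", shard_for(user_id))
```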

By understanding the different scaling strategies and implementing them appropriately, businesses can ensure high performance and availability for their cloud-based applications. Regular monitoring, load testing, and capacity planning are essential to determine the optimal scaling approach and ensure a seamless user experience.

Implementing Content Delivery Networks (CDNs)

Summary: This section explores the benefits of using content delivery networks (CDNs) to optimize cloud performance. It explains how CDNs work, their impact on latency and throughput, and provides guidance on implementing CDNs effectively.

What is a Content Delivery Network?

A Content Delivery Network (CDN) is a distributed network of servers strategically located around the world. CDNs store copies of content in multiple locations so that users can retrieve it from a server geographically closer to them, which reduces latency and improves overall performance. Static content is cached at these edge locations, and many CDNs also speed up the delivery of dynamic content that cannot be cached.

Benefits of Using a CDN

Implementing a CDN offers several benefits for optimizing cloud performance:

Reduced Latency

By delivering content from servers closer to the users, CDNs significantly reduce latency. This results in faster load times and improved user experience, particularly for geographically distributed audiences.

Improved Throughput

CDNs can handle high volumes of traffic by distributing the load across multiple servers. This improves overall throughput and ensures smooth content delivery even during peak demand periods.

Enhanced Scalability

CDNs are designed to scale easily, allowing businesses to handle sudden spikes in traffic without impacting performance. CDNs can dynamically allocate resources to handle increased demand, ensuring optimal performance and availability.

Global Reach

CDNs have a global presence, allowing businesses to reach audiences worldwide with minimal latency. This is particularly advantageous for businesses that target international markets or have a geographically dispersed customer base.

Implementing a CDN Effectively

To implement a CDN effectively, businesses should consider the following:

Content Caching

Identify the content that should be cached and distributed through the CDN. Static content, such as images, CSS files, and JavaScript files, is typically a good candidate for caching. Set caching rules and expiration times based on how often each type of content is updated.
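Caching rules are usually expressed as HTTP `Cache-Control` headers, which the origin sets or the CDN's configuration overrides. The sketch below shows one possible origin-side policy. The extensions and max-age values are assumptions. Long lifetimes suit only fingerprinted filenames (for example, `app.3f9a1c.js`) whose name changes whenever their content does.

```python
from pathlib import PurePosixPath

ONE_YEAR = 365 * 24 * 3600

# Assumed policy: long-lived immutable assets, short-lived HTML, no caching for APIs.
CACHE_RULES = {
    ".js": f"public, max-age={ONE_YEAR}, immutable",
    ".css": f"public, max-age={ONE_YEAR}, immutable",
    ".png": "public, max-age=86400",
    ".jpg": "public, max-age=86400",
    ".html": "public, max-age=300",
}

def cache_control_for(path: str) -> str:
    if path.startswith("/api/"):
        return "no-store"  # dynamic, per-user responses should not be cached
    # Unknown types: may be stored, but must be revalidated with the origin before reuse.
    return CACHE_RULES.get(PurePosixPath(path).suffix, "no-cache")

print(cache_control_for("/static/app.3f9a1c.js"))  # public, max-age=31536000, immutable
print(cache_control_for("/api/cart"))              # no-store
```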

CDN Selection

Choose a reliable CDN provider that aligns with the business’s specific requirements. Consider factors such as network coverage, performance, pricing, and support. Evaluate the CDN provider’s ability to integrate with the existing cloud infrastructure and monitor their performance metrics.

Configuration and Integration

Configure the CDN to work seamlessly with the existing cloud infrastructure. This may involve modifying DNS settings, configuring caching rules, and setting up TLS (SSL) certificates. Integrate the CDN properly with load balancers and origin servers so that content is delivered smoothly.

Monitoring and Optimization

Regularly monitor CDN performance and analyze performance metrics such as cache hit rate, latency, and throughput. Optimize caching rules, content delivery paths, and CDN configurations based on performance analysis. Continuously evaluate CDN performance and consider switching providers if necessary.

By leveraging CDNs effectively, businesses can improve cloud performance, reduce latency, and enhance overall user experience. CDNs are an essential tool for businesses operating in the digital era, where fast and reliable content delivery is paramount.

Securing Cloud Performance and Tuning

Summary: This section addresses the crucial aspect of securing cloud performance and tuning. It discusses strategies for protecting against performance-related security threats, such as DDoS attacks, and offers insights into ensuring a secure and high-performing cloud environment.

Securing Cloud Performance

Securing cloud performance involves protecting against threats that can impact performance, availability, and user experience. One significant threat is the Distributed Denial of Service (DDoS) attack, in which attackers flood a system or network with excessive traffic, causing performance degradation or even a complete service outage. To secure cloud performance, businesses should consider the following strategies:

DDoS Mitigation

Implementing DDoS mitigation strategies is crucial to protect cloud performance. This includes deploying DDoS protection services, such as traffic filtering, rate limiting, and anomaly detection. Employing a multi-layered defense approach, including both network and application-level protections, can help mitigate the impact of DDoS attacks.
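Rate limiting, one of the layers mentioned above, is often applied per client. The sketch below implements a sliding-window log keyed by client IP, with illustrative limits. An in-process dictionary like this protects only a single instance, so shared limits typically live in an external store. Volumetric network floods must be absorbed upstream, for example by a provider's DDoS protection service.

```python
import time
from collections import defaultdict, deque

class SlidingWindowLimiter:
    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock
        self.hits = defaultdict(deque)  # client id -> timestamps of recent requests

    def allow(self, client_id):
        now = self.clock()
        q = self.hits[client_id]
        while q and q[0] <= now - self.window:
            q.popleft()  # forget requests that fell out of the window
        if len(q) >= self.limit:
            return False  # over the limit: reject (e.g. respond with HTTP 429)
        q.append(now)
        return True

t = [0.0]
limiter = SlidingWindowLimiter(limit=3, window_seconds=1.0, clock=lambda: t[0])
print([limiter.allow("203.0.113.7") for _ in range(4)])  # [True, True, True, False]
print(limiter.allow("198.51.100.2"))                     # True: separate budget per client
t[0] = 1.5
print(limiter.allow("203.0.113.7"))                      # True: old requests expired
```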

Network Security

Ensuring robust network security is essential for maintaining cloud performance. Implementing firewalls, intrusion detection systems (IDS), and intrusion prevention systems (IPS) can help protect against unauthorized access and malicious activities. Regular network security audits and vulnerability assessments should be conducted to identify and address potential security risks.

Data Encryption

Encrypting sensitive data is crucial to protect against data breaches. Using secure communication channels, such as TLS (the successor to SSL), helps ensure data confidentiality and integrity in transit. Businesses should also consider encrypting data at rest, utilizing techniques such as disk encryption or database encryption.

Monitoring and Performance Optimization

Monitoring cloud performance is vital for identifying performance-related security threats and ensuring a secure environment. Businesses should implement robust performance monitoring tools and strategies to detect anomalies, identify potential security breaches, and optimize performance. This includes monitoring network traffic, server logs, and application-level performance metrics. Regular performance audits and vulnerability assessments should also be conducted to proactively identify and address security vulnerabilities.

Security Audits and Penetration Testing

Regular security audits and penetration testing are essential for maintaining a secure and high-performing cloud environment. Security audits assess the overall security posture, identify vulnerabilities, and recommend remediation actions. Penetration testing involves simulated attacks to uncover weaknesses in the cloud infrastructure and applications. By conducting these tests, businesses can identify and address security vulnerabilities before they are exploited.

Identity and Access Management

Implementing strong identity and access management (IAM) practices is critical for securing cloud performance. Businesses should enforce strict access controls, ensure the use of strong passwords, and implement multi-factor authentication. Regularly review and update user access permissions, revoke access for inactive users, and implement least privilege principles to minimize the risk of unauthorized access.

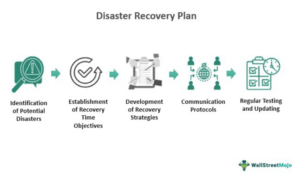

Disaster Recovery and Business Continuity

Having a robust disaster recovery and business continuity plan is essential for maintaining cloud performance and ensuring uninterrupted operations. This includes regular backups, redundant systems, failover mechanisms, and the ability to quickly recover from system failures or security incidents. Regularly test and update the disaster recovery plan to ensure its effectiveness in real-world scenarios.

Employee Training and Awareness

Employee training and awareness play a vital role in maintaining a secure and high-performing cloud environment. Businesses should educate employees about security best practices, such as identifying phishing attempts, using secure passwords, and reporting suspicious activities. Regular security awareness training and updates can help mitigate the risk of human error leading to security breaches.

By implementing robust security measures, conducting regular audits and testing, and maintaining a strong focus on performance optimization, businesses can ensure a secure and high-performing cloud environment. Securing cloud performance is an ongoing effort that requires constant monitoring, adaptation to evolving threats, and continuous improvement.

Continuous Performance Optimization and Testing

Summary: This section emphasizes the importance of continuous performance optimization and testing in the cloud. It explores the concept of DevOps and how it can be leveraged to continuously monitor, test, and optimize cloud performance.

The Role of DevOps in Performance Optimization

DevOps is a collaborative approach that combines development, operations, and quality assurance to enhance software delivery and overall performance. In the context of cloud performance optimization, DevOps plays a crucial role in ensuring continuous monitoring, testing, and optimization of cloud systems.

Continuous Monitoring

Continuous monitoring involves the use of performance monitoring tools to collect and analyze real-time data on cloud performance. By continuously monitoring key performance metrics, businesses can proactively identify performance issues, bottlenecks, and security threats. Continuous monitoring allows for prompt corrective actions and optimization efforts to maintain optimal performance.

Continuous Testing

Continuous testing ensures that applications and infrastructure are thoroughly tested throughout the development lifecycle. By automating testing processes and integrating them into the continuous integration and continuous delivery (CI/CD) pipeline, businesses can identify performance issues, vulnerabilities, and compatibility problems early on. Continuous testing enables businesses to address these issues promptly, reducing the risk of performance degradation in production environments.

Load Testing and Performance Benchmarking

Load testing and performance benchmarking are essential components of continuous performance optimization. Load testing involves simulating high-traffic scenarios to determine the performance limits of cloud systems. Performance benchmarking compares a system's measured performance against a baseline, such as previous releases, agreed performance targets, or industry-standard benchmarks. By regularly conducting load testing and performance benchmarking, businesses can identify performance bottlenecks and optimize their cloud infrastructure and applications accordingly.
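The minimal load-test sketch below sends a fixed number of requests using a bounded number of concurrent workers, then reports throughput and tail latency. `call_service` is a hypothetical placeholder, simulated with a short sleep, for a real call to the system under test. Dedicated load-testing tools are better suited for realistic traffic patterns.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor

def call_service():
    """Hypothetical request; replace with a real client call to the system under test."""
    start = time.perf_counter()
    time.sleep(0.01)  # simulated 10 ms of server work
    return time.perf_counter() - start

def load_test(total_requests=200, concurrency=20):
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda _: call_service(), range(total_requests)))
    elapsed = time.perf_counter() - start
    latencies.sort()
    p95 = latencies[math.ceil(0.95 * len(latencies)) - 1]  # nearest-rank percentile
    return {"rps": total_requests / elapsed, "p95_ms": p95 * 1000}

print(load_test())
```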

Optimization Iterations

Continuous performance optimization requires an iterative approach. By analyzing performance data, identifying areas for improvement, and implementing optimization strategies, businesses can continuously enhance the performance of their cloud systems. This involves fine-tuning configurations, optimizing code, adjusting resource allocation, and adopting new technologies or techniques that align with business goals and performance requirements.

Collaboration and Communication

Effective collaboration and communication between development, operations, and quality assurance teams are crucial for successful continuous performance optimization. By fostering a culture of collaboration, sharing performance data, and encouraging feedback, businesses can ensure that optimization efforts are aligned with business objectives and that all stakeholders are involved in the optimization process.

Continuous performance optimization is an ongoing effort that requires a proactive approach, the use of appropriate tools and techniques, and a commitment to continuous improvement. By embracing DevOps principles and integrating performance optimization into the development and operations lifecycle, businesses can achieve and maintain optimal cloud performance.

Future Trends in Cloud Performance Optimization

Summary: This section provides a glimpse into the future of cloud performance optimization, discussing emerging technologies and trends that will shape the landscape. It offers insights into how businesses can stay ahead by embracing these advancements.

Artificial Intelligence and Machine Learning

Artificial Intelligence (AI) and Machine Learning (ML) are poised to revolutionize cloud performance optimization. AI and ML algorithms can analyze vast amounts of performance data, identify patterns, and make intelligent decisions to optimize cloud systems. These technologies can automate performance tuning, predict potential issues, and dynamically adjust resource allocation based on workload demands. Businesses that leverage AI and ML in their performance optimization strategies can achieve higher levels of efficiency and responsiveness.

Serverless Computing

Serverless computing, most commonly offered as Function-as-a-Service (FaaS), removes the need for businesses to manage servers and infrastructure. With serverless architectures, businesses can focus solely on developing and optimizing application code. Cloud providers manage the underlying infrastructure and scale resources automatically based on demand. Because resources are allocated dynamically and on demand, serverless computing can improve scalability and cost-effectiveness. However, infrequently invoked functions may incur extra startup latency (cold starts).

Edge Computing

Edge computing brings computation and data storage closer to the devices or users that generate or consume the data. By processing data at the edge of the network, closer to the source, edge computing reduces latency and improves performance for applications that require real-time responsiveness. Edge computing can complement cloud computing by offloading processing tasks to local edge devices, enhancing performance and reducing dependence on centralized cloud resources.

Containerization and Orchestration

Containerization technologies such as Docker and orchestration platforms like Kubernetes have revolutionized application deployment and management. Containers provide lightweight and isolated environments for running applications, allowing for efficient resource utilization and faster deployment. Orchestration platforms enable the management and scaling of containerized applications across multiple servers. By adopting containerization and orchestration, businesses can optimize performance, enhance scalability, and streamline application management processes.

Quantum Computing

Quantum computing, although still in its early stages, may eventually influence cloud performance optimization. For certain classes of problems, quantum algorithms are expected to outperform classical ones, in some cases dramatically, which could open up new possibilities for optimization algorithms and performance modeling. As quantum computing matures, businesses may be able to apply it to complex performance-related challenges.

As technology continues to evolve, businesses must stay informed about emerging trends in cloud performance optimization. By embracing these advancements and adapting their strategies accordingly, businesses can stay ahead of the competition and deliver exceptional performance in the ever-changing digital landscape.