Welcome to our guide on fog computing architecture, an approach that moves computing closer to where data is generated. In this article, we explore the key components of fog computing, its advantages, and its real-world applications. Whether you are a tech enthusiast, a business owner, or simply curious about recent advances in technology, this article covers what you need to understand how fog computing works and where it fits.

Understanding Fog Computing

In today’s data-driven world, where billions of connected devices constantly generate massive amounts of data, fog computing has emerged as an important alternative to purely centralized processing. Fog computing, which is closely related to edge computing and often discussed alongside it, is a decentralized computing architecture that brings storage, compute, and networking resources closer to edge devices such as smartphones, IoT devices, and sensors, rather than relying solely on centralized cloud infrastructure. By distributing computing power, fog computing enables real-time data analysis and faster decision-making, easing the latency and bandwidth limitations of traditional cloud computing.

Edge Computing: The Foundation of Fog Computing

At the heart of fog computing lies the concept of edge computing. Edge computing refers to the practice of processing and analyzing data at or near the source, rather than sending it to a centralized cloud for computation. By leveraging edge devices’ computational capabilities, fog computing reduces the data transfer latency and bandwidth requirements, enabling faster response times and improved overall system performance.

The Role of Fog Nodes

In a fog computing architecture, fog nodes play a crucial role in enabling efficient data processing and communication. Fog nodes are distributed computing resources located at the network edge, closer to the edge devices. These nodes serve as intermediaries between the edge devices and the cloud, providing local storage, computation, and networking capabilities. By leveraging fog nodes, fog computing minimizes the need for data transmission to the cloud, reducing latency and bandwidth consumption.
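
As a rough illustration of how a fog node can cut the volume of data sent to the cloud, the sketch below collapses a window of raw sensor readings into a single summary record. The function name `summarize_window` and the summary fields are assumptions made for this example, not part of any particular fog platform.

```python
from statistics import mean


def summarize_window(readings):
    """Collapse a window of raw sensor readings into one summary record."""
    if not readings:
        raise ValueError("window must contain at least one reading")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


# Hypothetical temperature readings collected by one fog node.
window = [21.0, 21.5, 22.0, 21.5]
summary = summarize_window(window)
print(summary)  # one record is forwarded instead of four raw readings
```

In practice the window size and the choice of statistics would depend on what the cloud-side analysis actually needs.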

Key Components of Fog Computing

To fully understand fog computing, it’s essential to explore its key components and how they work together to create a robust architecture. Let’s take a closer look at the fundamental elements that make up a fog computing system.

Edge Devices

Edge devices, such as smartphones, wearables, and IoT sensors, are the endpoints that generate and collect data in a fog computing ecosystem. These devices act as data sources and interact directly with the users or the physical environment. With their increasing computational capabilities, edge devices can perform basic data processing tasks and communicate with fog nodes for more complex computations.

Fog Nodes

Fog nodes are distributed computing resources located at the network edge. These nodes act as intermediaries between the edge devices and the cloud, providing local storage, compute power, and networking capabilities. Fog nodes are responsible for processing data at the edge, enabling real-time analytics, and reducing the need for data transmission to centralized cloud servers.

Fog Orchestration and Management

Fog orchestration and management are critical components that ensure the efficient operation of a fog computing architecture. Fog orchestration involves the coordination and allocation of resources across multiple fog nodes, enabling workload distribution and efficient task execution. Fog management encompasses various tasks, including monitoring node performance, resource provisioning, and security management.
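
One simple way to picture workload distribution is a greedy "least-loaded" placement, sketched below. This is only an illustrative heuristic under assumed inputs (node names and per-task cost estimates are hypothetical); real orchestrators also weigh locality, deadlines, and node capabilities.

```python
import heapq


def assign_tasks(node_names, task_costs):
    """Greedy placement: each task goes to the node with the smallest
    accumulated cost so far."""
    heap = [(0.0, name) for name in node_names]
    heapq.heapify(heap)
    placement = {name: [] for name in node_names}
    for task_id, cost in task_costs.items():
        load, name = heapq.heappop(heap)
        placement[name].append(task_id)
        heapq.heappush(heap, (load + cost, name))
    return placement


# Hypothetical nodes and task cost estimates (arbitrary units).
placement = assign_tasks(["fog-a", "fog-b"], {"t1": 4, "t2": 2, "t3": 1, "t4": 3})
print(placement)
```

Ties are broken by node name here, which keeps the result deterministic for a given input order.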

Fog-to-Cloud Integration

While fog computing enables localized data processing, there are situations where data needs to be shared with the cloud for further analysis or long-term storage. Fog-to-cloud integration allows seamless communication and data transfer between fog nodes and cloud servers, ensuring a cohesive architecture that combines the benefits of both fog computing and cloud computing.
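
A common pattern for fog-to-cloud transfer is to buffer records locally and upload them in batches. The sketch below assumes a hypothetical `send` callable standing in for whatever cloud upload mechanism is actually used; it is not a real cloud API.

```python
class UploadBuffer:
    """Collects records on a fog node and hands them to `send` in batches."""

    def __init__(self, batch_size, send):
        self.batch_size = batch_size
        self.send = send  # hypothetical callable that uploads one batch
        self.pending = []

    def add(self, record):
        self.pending.append(record)
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self):
        """Upload whatever is pending, for example before shutdown."""
        if self.pending:
            self.send(list(self.pending))
            self.pending.clear()


uploaded = []  # stands in for the cloud side in this example
buffer = UploadBuffer(batch_size=3, send=uploaded.append)
for reading in range(7):
    buffer.add(reading)
buffer.flush()
print(uploaded)
```

A production version would also need retry handling for failed uploads, which is omitted here.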

Data Analytics and Machine Learning

Data analytics and machine learning algorithms play a crucial role in extracting valuable insights from the vast amount of data generated by edge devices. Fog computing enables real-time data analysis at the edge, allowing organizations to derive actionable intelligence and make informed decisions without relying solely on centralized cloud infrastructure.
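
As a minimal example of analytics that can run at the edge, the sketch below flags readings that deviate strongly from a rolling window using a z-score. The class name, window size, warm-up length, and threshold are all assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    def __init__(self, window=20, threshold=3.0, warmup=5):
        self.values = deque(maxlen=window)
        self.threshold = threshold
        self.warmup = warmup  # minimum history before flagging anything

    def observe(self, value):
        """Return True if `value` deviates strongly from the recent window."""
        is_anomaly = False
        if len(self.values) >= self.warmup:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self.values.append(value)
        return is_anomaly


detector = RollingAnomalyDetector(window=10)
for reading in [10, 11, 10, 9, 10, 11, 10, 50]:
    if detector.observe(reading):
        print("anomaly:", reading)
```

Only the flagged readings, rather than the full stream, would then need to be reported upstream.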

Advantages of Fog Computing

Fog computing offers several advantages over traditional cloud computing, making it a compelling choice for various applications. Let’s explore some of the key benefits that fog computing brings to the table.

Reduced Latency

One of the primary advantages of fog computing is reduced latency. By bringing computing resources closer to the edge devices, fog computing minimizes the time it takes for data to travel between the source and the processing nodes. This near-real-time processing enables faster response times and enhances the overall user experience, particularly in applications where low latency is critical, such as autonomous vehicles or real-time monitoring systems.
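
To make the latency argument concrete, a back-of-the-envelope model can add up network round-trip time, transfer time, and compute time. The figures below are invented for illustration only and do not describe any measured deployment.

```python
def response_time_ms(rtt_ms, payload_bytes, bandwidth_mbps, compute_ms):
    """Estimate response time as round trip + transfer + compute."""
    # 1 Mbps equals 1000 bits per millisecond.
    transfer_ms = payload_bytes * 8 / (bandwidth_mbps * 1000)
    return rtt_ms + transfer_ms + compute_ms


# Hypothetical numbers: a nearby fog node with slower compute versus
# a distant cloud region with faster compute.
fog = response_time_ms(rtt_ms=5, payload_bytes=100_000, bandwidth_mbps=100, compute_ms=20)
cloud = response_time_ms(rtt_ms=80, payload_bytes=100_000, bandwidth_mbps=20, compute_ms=5)
print(f"fog: {fog:.1f} ms, cloud: {cloud:.1f} ms")
```

With these assumed inputs the fog path is faster even though its compute step is slower, because the round trip and transfer dominate. With a large compute-bound job the balance can tip the other way.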

Improved Network Efficiency

With fog computing, organizations can offload data processing tasks from the cloud to the edge, reducing the amount of network traffic and bandwidth consumption. By distributing the computational load, fog computing optimizes network efficiency and minimizes the strain on centralized cloud infrastructure. This approach is particularly beneficial in scenarios where network connectivity is limited or unreliable, ensuring uninterrupted operations even in challenging environments.

Enhanced Security and Privacy

Fog computing enhances security and privacy by keeping sensitive data closer to the source, reducing the risk of data breaches during transmission to the cloud. With fog computing, organizations can implement localized security measures, such as encryption and access control, at the edge, ensuring data protection and compliance with privacy regulations. Additionally, fog computing enables data anonymization and aggregation, preserving privacy while still deriving valuable insights from the collected data.
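
One way a fog node might reduce exposure of identifiers before forwarding data is keyed hashing, sketched below with Python's standard `hmac` module. Note that this is pseudonymization rather than full anonymization, and the key and device identifiers shown are placeholders.

```python
import hashlib
import hmac


def pseudonymize(device_id: str, secret_key: bytes) -> str:
    """Replace a device identifier with a keyed SHA-256 digest."""
    return hmac.new(secret_key, device_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Placeholder key: a real deployment would load this from secure storage.
key = b"example-key-kept-on-the-fog-node"
record = {"device": pseudonymize("sensor-42", key), "temperature": 21.5}
print(record)
```

Because the same identifier always maps to the same digest under a given key, records can still be grouped per device without revealing the original identifier to the cloud.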

Scalability and Flexibility

Scalability is a crucial aspect of any computing architecture, and fog computing offers inherent scalability and flexibility. By distributing computational resources across multiple fog nodes, organizations can easily scale their infrastructure to accommodate changing demands, without relying solely on centralized cloud servers. This flexibility allows for efficient resource allocation and ensures optimal performance even as the number of edge devices and data sources grows.

Real-World Applications of Fog Computing

Fog computing has gained significant traction across various industries, transforming the way organizations operate and deliver services. Let’s explore some of the real-world applications where fog computing is making a substantial impact.

Smart Cities

In smart city initiatives, fog computing plays a vital role in enabling real-time data analysis and decision-making at the edge. With the deployment of sensors and IoT devices throughout the city, fog computing allows for efficient management of resources, traffic optimization, and improved public safety. From smart street lighting and waste management to intelligent transportation systems, fog computing is revolutionizing the way cities operate, making them more sustainable and livable.

Healthcare

Fog computing has immense potential in the healthcare industry, where timely access to patient data and real-time monitoring are critical. By leveraging fog computing, healthcare providers can ensure continuous patient monitoring, reduce response times, and enable remote diagnostics and telemedicine. Fog computing also enhances the security and privacy of sensitive medical data, allowing for secure and efficient data sharing among healthcare professionals.

Manufacturing

Manufacturing facilities are increasingly adopting fog computing to optimize their operations and improve productivity. By deploying edge devices and fog nodes on the factory floor, manufacturers can collect and process data in real-time, enabling predictive maintenance, quality control, and process optimization. Fog computing also facilitates seamless integration of Internet of Things (IoT) devices and machinery, creating a connected and intelligent manufacturing environment.

Transportation

In the transportation sector, fog computing is revolutionizing various aspects, from connected vehicles to intelligent traffic management systems. By leveraging fog computing, vehicles can access real-time traffic data, enabling efficient route planning and reducing congestion. Fog computing also empowers autonomous vehicles by enabling onboard data processing and decision-making, ensuring safe and reliable operations.

Energy Management

Fog computing plays a crucial role in optimizing energy management systems by providing real-time data analytics and control at the edge. With fog computing, energy providers can monitor and control energy generation, distribution, and consumption in a more granular and efficient manner. This enables better load balancing, demand response, and integration of renewable energy sources, contributing to a more sustainable and resilient energy infrastructure.

Challenges and Limitations of Fog Computing

While fog computing offers immense potential, it also comes with its fair share of challenges and limitations. Let’s explore some of the key obstacles that need to be addressed for widespread adoption.

Network Connectivity and Interoperability

Fog computing depends on network connectivity between edge devices, fog nodes, and cloud servers. Although fog nodes can continue processing locally when the link to the cloud degrades, maintaining seamless communication and synchronization becomes a challenge where connectivity is limited or unreliable. Interoperability among different fog nodes and edge devices also needs to be addressed to ensure compatibility and smooth integration.

Resource Constraints

Edge devices often have limited computational power, memory, and storage capacities. This resource constraint poses challenges for deploying complex applications and performing resource-intensive tasks at the edge. Optimizing resource allocation and workload distribution across fog nodes becomes crucial to ensure efficient utilization of available resources.
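
A simple way to reason about resource constraints is to try the nearest tier first and fall back to larger ones only when a task does not fit. The sketch below assumes a hypothetical `Tier` record and made-up capacity figures.

```python
from dataclasses import dataclass


@dataclass
class Tier:
    name: str
    free_memory_mb: int
    free_cpu_cores: float


def pick_tier(required_memory_mb, required_cpu_cores, tiers):
    """Return the name of the first tier (ordered nearest first) that can
    hold the task, or None if no tier fits."""
    for tier in tiers:
        if (tier.free_memory_mb >= required_memory_mb
                and tier.free_cpu_cores >= required_cpu_cores):
            return tier.name
    return None


# Hypothetical capacities, ordered from the device outward.
tiers = [
    Tier("edge-device", free_memory_mb=64, free_cpu_cores=0.5),
    Tier("fog-node", free_memory_mb=2048, free_cpu_cores=4),
    Tier("cloud", free_memory_mb=65536, free_cpu_cores=64),
]
print(pick_tier(32, 0.25, tiers))
print(pick_tier(512, 2, tiers))
```

This first-fit rule ignores transfer cost and deadlines, so it is a starting point rather than a complete placement policy.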

Security and Privacy Risks

With distributed computing resources and data processing at the edge, fog computing introduces new security and privacy challenges. Securing edge devices and fog nodes from physical and cyber threats is crucial to prevent unauthorized access and data breaches. Privacy concerns also arise when processing sensitive data at the edge, necessitating robust encryption and access control mechanisms.

Scalability and Management Complexity

As the number of edge devices and data sources increases, managing and scaling a fog computing architecture can become complex. Coordinating and orchestrating resources across multiple fog nodes, ensuring workload distribution, and maintaining system performance requires efficient management strategies and tools.

Reliability and Fault Tolerance

Reliability and fault tolerance are critical considerations in fog computing, particularly in mission-critical applications. Since fog computing relies on a distributed architecture, failures in individual fog nodes or edge devices should not disrupt the overall system functionality. Implementing redundancy, fault detection, and recovery mechanisms is essential to ensure uninterrupted operations and minimize downtime.
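
Fault detection is often built on heartbeats: a node that has not reported within a timeout is treated as failed and work is redirected. The sketch below is a minimal version of that idea with hypothetical node names; timestamps are passed in explicitly to keep the example deterministic.

```python
class HeartbeatMonitor:
    def __init__(self, timeout_s):
        self.timeout_s = timeout_s
        self.last_seen = {}  # node name -> time of last heartbeat

    def beat(self, node, now):
        self.last_seen[node] = now

    def healthy_nodes(self, now):
        return sorted(
            node for node, seen in self.last_seen.items()
            if now - seen <= self.timeout_s
        )

    def pick(self, preferred, now):
        """Use the preferred node if healthy, else fail over to another."""
        healthy = self.healthy_nodes(now)
        if preferred in healthy:
            return preferred
        return healthy[0] if healthy else None


monitor = HeartbeatMonitor(timeout_s=10)
monitor.beat("fog-a", now=0)
monitor.beat("fog-b", now=5)
print(monitor.pick("fog-a", now=12))  # fog-a has timed out by now
```

A real system would typically use a monotonic clock and combine this with redundancy of state, not only of compute.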

Standardization and Compatibility

The fog computing ecosystem involves a wide range of devices, protocols, and platforms. Establishing industry standards and ensuring compatibility among different vendors’ products is crucial for seamless integration and interoperability. Standardization efforts are necessary to facilitate the widespread adoption of fog computing and foster collaboration among various stakeholders.

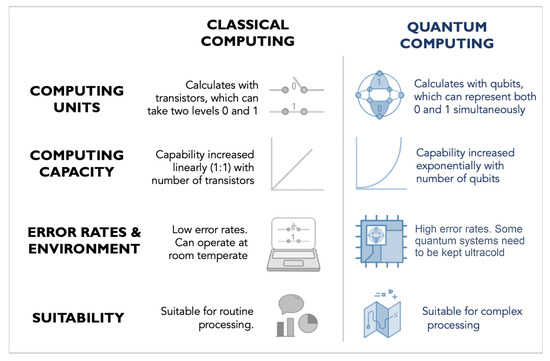

Fog Computing vs. Cloud Computing

While fog computing and cloud computing share similarities, they are distinct in their architecture and application scenarios. Let’s explore the key differences and similarities between these two computing paradigms.

Data Processing and Storage

In cloud computing, data processing and storage primarily occur in centralized data centers. Fog computing, on the other hand, leverages distributed resources at the network edge for localized processing. While cloud computing offers virtually unlimited storage and computing power, fog computing provides real-time processing and reduced latency by bringing computation closer to the data source.

Latency and Bandwidth

Cloud computing relies on data transmission to and from centralized servers, which can introduce latency and consume significant bandwidth. Fog computing minimizes latency by processing data locally at the edge, enabling faster response times and reducing the need for extensive data transfer. This makes fog computing suitable for applications that require real-time analytics and low-latency interactions.

Scalability and Flexibility

Cloud computing offers high scalability, allowing organizations to scale their resources up or down based on demand. However, it relies on centralized data centers for resource provisioning. Fog computing, on the other hand, provides inherent scalability by distributing resources across multiple edge nodes. This distributed architecture enables efficient resource allocation and makes fog computing more flexible in scenarios where edge devices and data sources vary in number and location.

Applications and Use Cases

Cloud computing is well-suited for applications that require massive storage, complex computations, and long-term data analysis. Fog computing, on the other hand, excels in applications that demand real-time analytics, low-latency communication, and edge-based decision-making. Fog computing finds its applications in industries such as transportation, healthcare, manufacturing, and smart cities, where localized processing and immediate response are critical.

Complementary Nature

Cloud computing and fog computing are not mutually exclusive but rather complementary in certain scenarios. Organizations can leverage both paradigms to create hybrid architectures that combine the benefits of centralized cloud infrastructure with localized edge processing. Fog computing can offload data processing tasks from the cloud, reducing latency and optimizing network bandwidth, while cloud computing can provide the scalability and storage capacity needed for long-term data analysis and resource-intensive computations.

Security and Privacy Considerations in Fog Computing

Security and privacy are paramount in any computing architecture, and fog computing is no exception. Let’s explore the unique security and privacy considerations that arise in fog computing and the measures that can be taken to mitigate potential risks.

Securing Edge Devices and Fog Nodes

Edge devices and fog nodes are potential targets for physical and cyber-attacks, making their security a top priority. Implementing robust authentication mechanisms, encryption protocols, and access control measures is essential to prevent unauthorized access and data breaches. Regular security updates, patches, and monitoring of edge devices and fog nodes are also crucial to maintain a secure computing environment.

Data Encryption and Access Control

With data being processed and stored at the edge, fog computing requires strong encryption mechanisms to protect sensitive information. Encrypting data in transit and at rest ensures that even if unauthorized access occurs, the data remains secure. Access control mechanisms, such as role-based access control and data anonymization techniques, can further safeguard privacy and prevent unauthorized data exposure.
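
Role-based access control can be sketched as a mapping from roles to permissions plus a single check function. The role and permission names below are invented for this example.

```python
# Hypothetical roles and permissions for a fog deployment.
ROLE_PERMISSIONS = {
    "operator": {"read_telemetry"},
    "engineer": {"read_telemetry", "update_config"},
    "admin": {"read_telemetry", "update_config", "manage_users"},
}


def is_allowed(roles, permission):
    """Return True if any of the user's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_allowed(["operator"], "update_config"))
print(is_allowed(["operator", "engineer"], "update_config"))
```

Unknown roles grant nothing, so the check fails closed if a role name is misspelled or removed.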

Trustworthiness of Fog Nodes

Ensuring the trustworthiness of fog nodes is essential to maintain the integrity of the fog computing system. Employing secure boot mechanisms, integrity checks, and attestation protocols can verify the authenticity and integrity of fog nodes, preventing malicious entities from compromising the system. Regular monitoring and auditing of fog nodes also play a crucial role in maintaining their trustworthiness.
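
The integrity-check part of this can be illustrated by comparing the SHA-256 digest of a software image against a known-good value. This sketch covers only that comparison; secure boot and remote attestation involve hardware roots of trust and signed measurements that are beyond a few lines of code. The image bytes below are a placeholder.

```python
import hashlib
import hmac


def image_digest(image_bytes: bytes) -> str:
    return hashlib.sha256(image_bytes).hexdigest()


def verify_image(image_bytes: bytes, expected_digest: str) -> bool:
    """Compare digests in constant time to avoid leaking match position."""
    return hmac.compare_digest(image_digest(image_bytes), expected_digest)


# Placeholder image content; the trusted digest would normally come from
# a signed manifest rather than being computed on the same node.
trusted = image_digest(b"firmware v1.0")
print(verify_image(b"firmware v1.0", trusted))
print(verify_image(b"firmware v1.0 (modified)", trusted))
```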

Data Governance and Compliance

Compliance with data protection regulations, such as the General Data Protection Regulation (GDPR), is critical when processing and storing data in fog computing architectures. Organizations must establish clear data governance policies, including data retention and deletion, consent management, and transparent data usage practices. By adhering to data protection regulations, organizations can ensure privacy compliance while leveraging the benefits of fog computing.
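
A retention policy can be enforced on a fog node by periodically dropping records older than an agreed period. The sketch below assumes each record carries a hypothetical `collected_at` timestamp; the 30-day period is an arbitrary example, not a regulatory requirement.

```python
from datetime import datetime, timedelta, timezone


def apply_retention(records, retention, now):
    """Keep only records collected within the retention period."""
    cutoff = now - retention
    return [r for r in records if r["collected_at"] >= cutoff]


now = datetime(2024, 1, 31, tzinfo=timezone.utc)
records = [
    {"id": 1, "collected_at": datetime(2023, 12, 15, tzinfo=timezone.utc)},
    {"id": 2, "collected_at": datetime(2024, 1, 10, tzinfo=timezone.utc)},
]
kept = apply_retention(records, timedelta(days=30), now)
print([r["id"] for r in kept])
```

Actual retention periods and deletion guarantees should come from the organization's data governance policy and legal advice.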

Fog Computing Implementation Strategies

Implementing fog computing requires careful planning and execution. Let’s explore different strategies organizations can adopt when deploying fog computing architecture.

Edge-First Approach

In an edge-first approach, organizations prioritize deploying fog nodes and edge devices across their infrastructure. This strategy ensures that computational resources and data processing capabilities are readily available at the edge, enabling localized decision-making and real-time analytics. By leveraging edge devices’ computational power, organizations can minimize latency and reduce dependence on centralized cloud infrastructure.

Cloud-Integrated Approach

In a cloud-integrated approach, fog computing architecture is designed to work seamlessly with existing cloud infrastructure. Organizations leverage fog computing for localized processing and real-time analytics at the edge, while leveraging the cloud for long-term data storage, resource scalability, and complex computations. This strategy allows for a hybrid architecture that combines the benefits of both paradigms, providing flexibility and scalability.

Collaborative Fog Computing

In a collaborative fog computing approach, multiple organizations collaborate to create a federated fog computing ecosystem. By pooling together resources and sharing computational capabilities, organizations can collectively leverage the benefits of fog computing. Collaborative fog computing enables efficient resource utilization, workload distribution, and enhanced scalability, making it particularly suitable for large-scale deployments or scenarios where resource constraints are a concern.

Application-Aware Fog Computing

Application-aware fog computing focuses on tailoring the fog computing architecture to specific application requirements. By analyzing the unique needs of the application, organizations can design a fog computing system that optimizes resource allocation, data processing, and communication. This approach ensures that the fog computing architecture is aligned with the application’s goals, performance requirements, and scalability needs.

Future Trends and Innovations in Fog Computing

As fog computing continues to evolve, new trends and innovations are emerging, shaping the future of this transformative technology. Let’s explore some of the key areas of research and potential advancements in fog computing.

Artificial Intelligence at the Edge

The integration of artificial intelligence (AI) and machine learning (ML) algorithms with fog computing enables intelligent decision-making at the edge. By deploying AI models directly on fog nodes, organizations can perform complex analytics and predictive tasks in real-time. This integration opens up possibilities for autonomous edge devices, personalized services, and adaptive systems that continuously learn and improve based on local data.

Blockchain and Fog Computing

The combination of blockchain technology and fog computing holds promise for creating secure and decentralized applications. By leveraging the transparency and immutability of blockchain, fog computing architectures can enhance trust, data integrity, and secure communication between edge devices. Blockchain can be used to ensure the authenticity and integrity of data collected and processed at the edge, further strengthening the security and privacy of fog computing systems.
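
The tamper-evidence property mentioned here rests on hash chaining, where each block's hash covers the previous block's hash. The sketch below shows only that one ingredient; it has no consensus, signatures, or networking, so it is not a blockchain in itself. Function and field names are assumptions for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64


def make_block(prev_hash, payload):
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
    return {"prev": prev_hash, "payload": payload, "hash": digest}


def append_block(chain, payload):
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append(make_block(prev, payload))


def verify_chain(chain):
    """Recompute every hash and check each link to the previous block."""
    prev = GENESIS
    for block in chain:
        expected = make_block(prev, block["payload"])["hash"]
        if block["prev"] != prev or block["hash"] != expected:
            return False
        prev = block["hash"]
    return True


chain = []
append_block(chain, {"sensor": "s1", "temp": 21.5})
append_block(chain, {"sensor": "s1", "temp": 21.7})
print(verify_chain(chain))
chain[0]["payload"]["temp"] = 99.0  # simulate tampering with stored data
print(verify_chain(chain))
```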

5G and Fog Computing Integration

The advent of 5G networks presents exciting opportunities for fog computing. The high-speed, low-latency capabilities of 5G networks enable seamless communication between edge devices and fog nodes, facilitating real-time data processing and decision-making. The integration of 5G and fog computing paves the way for enhanced smart city initiatives, autonomous vehicles, augmented reality applications, and other latency-sensitive use cases.

Edge-to-Edge Collaboration

Edge-to-edge collaboration refers to the cooperation and coordination between multiple fog nodes at the edge. By sharing resources, data, and computational capabilities, fog nodes can collaborate to solve complex problems and perform distributed computations. This collaboration enables efficient resource utilization, load balancing, and fault tolerance, enhancing the overall performance and scalability of fog computing architectures.

Privacy-Preserving Fog Computing

Privacy-preserving fog computing focuses on developing techniques and algorithms that protect user privacy while still enabling valuable data analysis and insights. Differential privacy, federated learning, and secure multiparty computation are some of the approaches that can be employed to ensure data privacy in fog computing. By preserving privacy at the edge, organizations can build trust and encourage the widespread adoption of fog computing technologies.
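
Of the approaches listed, federated learning is the easiest to sketch: each node trains locally and shares only model weights, which a coordinator combines by a sample-weighted average. The example below shows just that averaging step with made-up weights; local training, communication, and any added noise are omitted.

```python
def federated_average(updates):
    """Combine local model weights into a global model.

    `updates` is a list of (weights, num_samples) pairs, where `weights`
    is a list of floats of equal length on every node.
    """
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no samples reported by any node")
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


# Hypothetical updates from two fog nodes: raw data never leaves the nodes.
updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
print(federated_average(updates))  # [2.5, 3.5]
```

Nodes with more local samples pull the global model further toward their weights, which is the usual design choice for this kind of averaging.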

In conclusion, fog computing architecture is poised to change how we build and interact with connected systems. Its ability to bring processing power closer to edge devices opens up many possibilities for innovation and efficiency. As industries adopt fog computing, we can expect significant changes across various sectors. Fog computing offers reduced latency, improved network efficiency, enhanced security and privacy, and scalability, making it an attractive option for a wide range of applications. From smart cities and healthcare to manufacturing and transportation, fog computing is enabling real-time analytics and decision-making at the edge.

However, fog computing also comes with its share of challenges. Network connectivity and interoperability, resource constraints, security risks, reliability, and standardization are some of the key challenges that need to be addressed for widespread adoption. Organizations must implement robust security measures, ensure the trustworthiness of fog nodes, and comply with data protection regulations to mitigate potential risks.

When implementing fog computing, organizations can adopt different strategies based on their requirements. An edge-first approach prioritizes deploying fog nodes and edge devices to enable localized processing and real-time analytics. A cloud-integrated approach combines fog computing with centralized cloud infrastructure, leveraging the benefits of both paradigms. Collaborative fog computing involves multiple organizations pooling resources and sharing capabilities to create a federated fog computing ecosystem. Application-aware fog computing tailors the architecture to specific application needs, optimizing resource allocation and performance.

Looking to the future, fog computing is set to witness exciting advancements. The integration of artificial intelligence and machine learning at the edge enables intelligent decision-making and adaptive systems. Blockchain integration enhances trust, data integrity, and secure communication in fog computing architectures. The integration of 5G networks with fog computing opens up opportunities for latency-sensitive applications. Edge-to-edge collaboration enables efficient resource utilization and fault tolerance. Privacy-preserving techniques ensure data privacy while enabling valuable data analysis. These trends and innovations will shape the future of fog computing, expanding its capabilities and applications.

Ultimately, fog computing architecture brings processing power and data analysis capabilities closer to edge devices, enabling real-time analytics, reduced latency, improved network efficiency, and enhanced security and privacy. With applications spanning industries such as smart cities, healthcare, manufacturing, and transportation, fog computing is changing how organizations operate and deliver services. However, challenges such as network connectivity, resource constraints, security risks, and standardization need to be addressed for widespread adoption. By carefully implementing fog computing strategies and keeping up with future trends and innovations, organizations can make the most of fog computing as the technology continues to evolve.